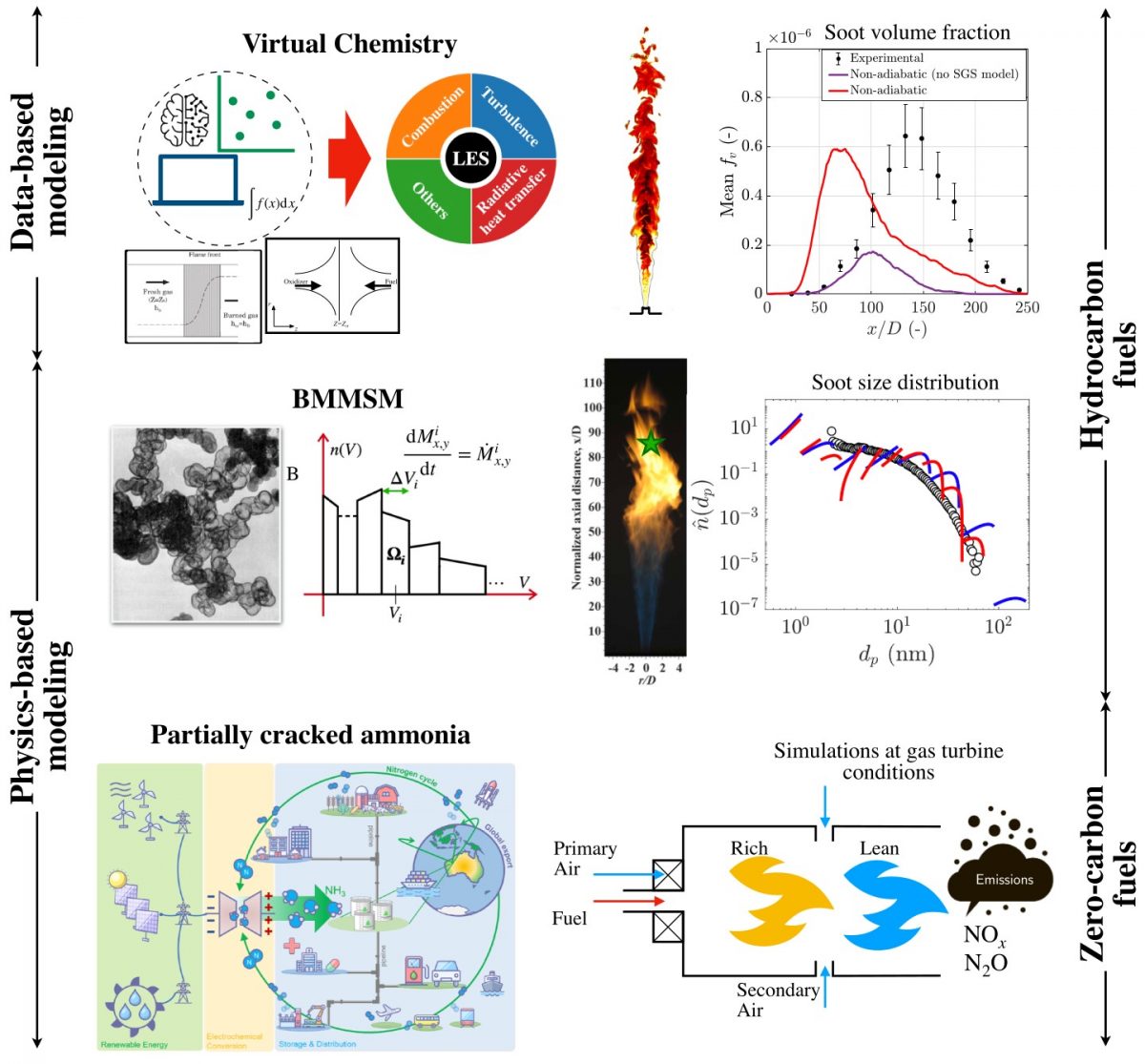

The need to regulate greenhouse gas emissions has driven the search for clean and efficient energy solutions, requiring the integration of alternative fuels for a sustainable future. Alternative fuels (hydrogen, ammonia, biofuels, Sustainable Aviation Fuels (SAF)) differ from traditional hydrocarbon fuels in attributes such as burning rate and pollutant emissions, leading to new challenges. Addressing these challenges requires numerical combustion modeling and, given the complexity of such systems, sophisticated strategies for efficient simulation. In this context, soot formation is considered a major challenge because of its complex nature, and it will still be present in future energy systems (e.g., those using biofuels or SAF). In the first part of the presentation, I will introduce two different approaches to soot modeling. The first is the so-called virtual chemistry approach, developed at EM2C. This method involves building a global mechanism comprising virtual species and reactions, whose thermodynamic properties and kinetic rate parameters are optimized by machine learning algorithms. The methodology focuses primarily on capturing essential sooting-flame properties such as temperature, laminar flame speed, radiation, and soot volume fraction. It has been adapted for the simulation of turbulent sooting flames using Large Eddy Simulation (LES), which I will present in further detail. The second approach, developed more recently at Princeton, is the Bivariate Multi-Moment Sectional Method (BMMSM). BMMSM is designed for computationally efficient tracking of the soot size distribution in turbulent reacting flows. It combines the sectional method with the method of moments to characterize the size distribution. Notably, BMMSM employs fewer soot sections than traditional sectional models while considering three volume-surface moments per section to account for soot’s fractal aggregate morphology.
Then, I will present LES results of the evolution of the soot size distribution in a turbulent nonpremixed flame. In the second part of this presentation, I will present insights into the emissions from the combustion of other candidate fuels, including recent work on the use of ammonia, to determine whether these emissions are sufficiently low compared with those of current fuels while minimizing societal and environmental impacts. I will then present simulation results of partially cracked ammonia combustion, representative of the design of future gas turbines for power production, to identify the mechanisms behind the production of carbon-free greenhouse gases, such as N2O, and pollutants, such as NOx, and to see how they compare with the emission trends associated with traditional hydrocarbon fuels. Finally, I will discuss a few points highlighting how the needs and challenges of combustion science are evolving.

Event category: Fluids, Thermal Science and Combustion

Rayleigh scattering for optical flow diagnostics: principles and applications to aeroacoustics and thermal turbulence

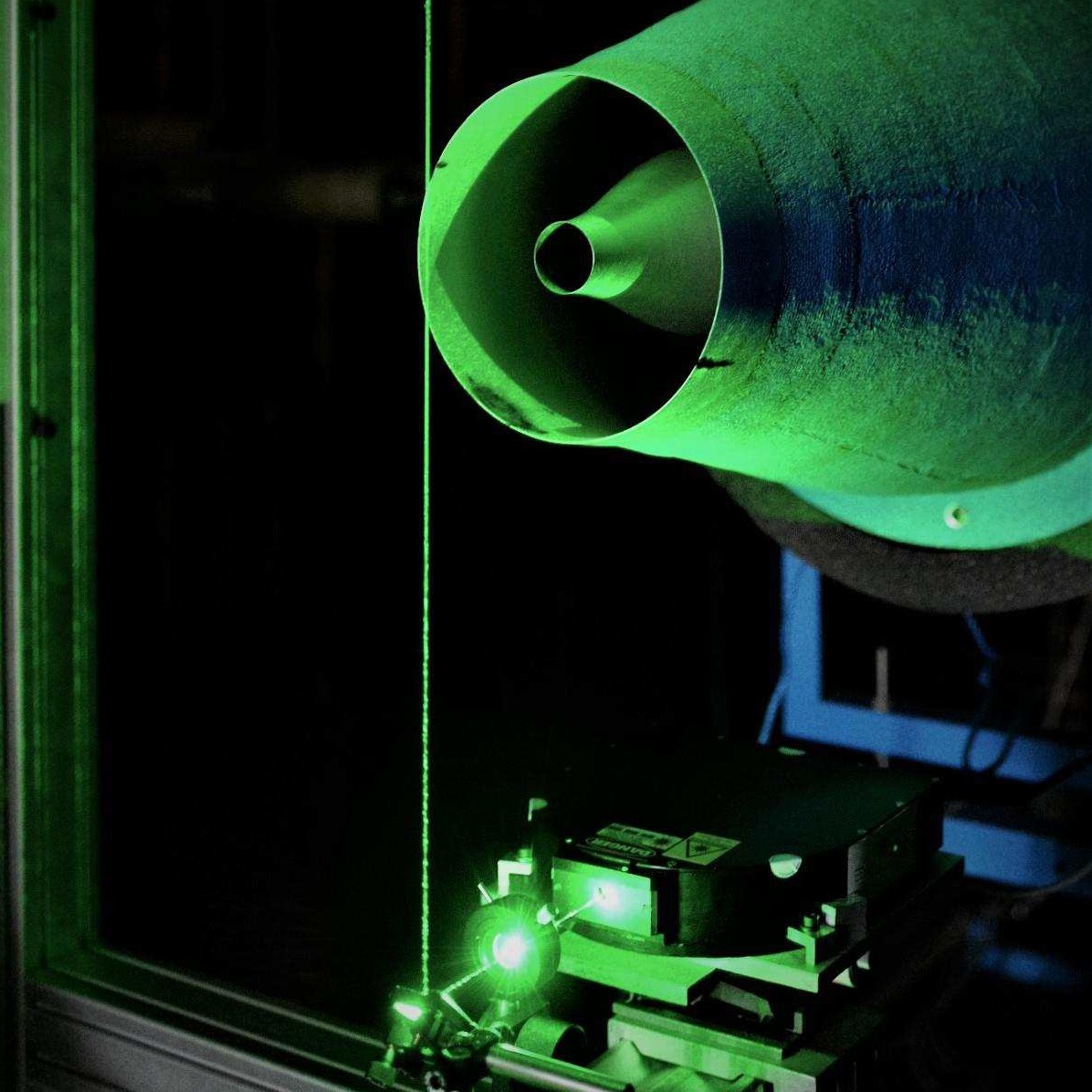

Rayleigh scattering is the regime in which an electromagnetic wave is scattered by particles that are small compared with the wavelength considered. In optical flow diagnostics, the wave generally comes from a laser source in the visible range, and the particles are the molecules that make up the gas. No tracer is needed beyond what is intrinsically present in the flow, which makes the method particularly well suited to high-speed flows. A range of techniques follows from studying the light scattered by the molecules. We will focus in particular on its intensity, which allows the local density of a flow to be measured, with two applications:

– the study of correlations between hydrodynamic fluctuations in a Mach 0.9 jet and the acoustic radiation in the downstream direction.

– the measurement of temperature fluctuations at frequencies of several kilohertz in the wake of a heated rod (Mach ~0.01).

We will also touch on methods based on spectral analysis of the scattered light, which in principle give access to the local temperature and velocity in addition to the density.
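As a rough illustration of the intensity-based measurement, the sketch below converts a Rayleigh signal into density by taking its ratio to a reference condition. It assumes a fixed gas composition, constant laser power and collection efficiency, and a known background level; the temperature step further assumes an ideal gas at constant pressure, which is plausible for a wake at Mach ~0.01. The function names and numerical values are hypothetical and are not taken from the talk.

```python
import numpy as np

def density_from_rayleigh(signal, background, signal_ref, rho_ref):
    """Density from Rayleigh intensity, by ratio to a reference condition.

    Assumes the background-subtracted signal is proportional to the
    molecular number density (fixed composition, fixed laser power).
    """
    signal = np.asarray(signal, dtype=float)
    return rho_ref * (signal - background) / (signal_ref - background)

def temperature_from_density(rho, rho_ref, temp_ref):
    """Temperature for an ideal gas at constant pressure: T = T_ref * rho_ref / rho."""
    return temp_ref * rho_ref / np.asarray(rho, dtype=float)

# Hypothetical detector counts: reference taken in ambient air at 293 K
rho = density_from_rayleigh(signal=[210.0, 110.0], background=10.0,
                            signal_ref=210.0, rho_ref=1.2)
temp = temperature_from_density(rho, rho_ref=1.2, temp_ref=293.0)
```

Halving the background-subtracted signal halves the inferred density and, under the constant-pressure assumption, doubles the inferred temperature.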

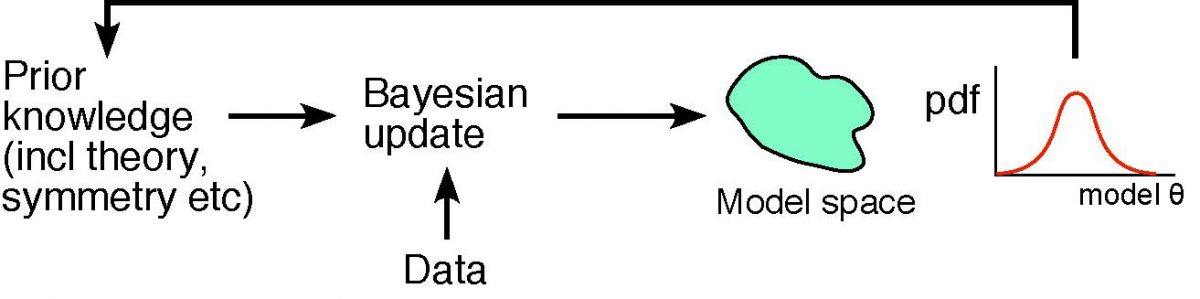

Bayesian Inference for Construction of Inverse Models from Data

This talk considers the inverse problem y=f(x), where x and y are observable parameters and we wish to recover the model f. Examples include dynamical systems and combat models with y=dx/dt and x=parameter(s), water catchments with y=streamflow and x=rainfall, and groundwater vulnerability with y=pollutant concentration(s) and x=hydrological parameter(s). Historically, these have been solved by many methods, including regression or sparse regularization for dynamical system models, and various empirical correlation methods for rainfall-runoff and groundwater vulnerability models. These can instead be analyzed within a Bayesian framework, using the maximum a posteriori (MAP) method to estimate the model parameters, and the Bayesian posterior distribution to estimate the parameter variances (uncertainty quantification). For systems with unknown covariance parameters, the joint maximum a posteriori (JMAP) and variational Bayesian approximation (VBA) methods can be used for their estimation. These methods are demonstrated by the analysis of a number of dynamical and hydrological systems.
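A minimal sketch of the MAP step, under assumptions that are not stated in the abstract: the model is linear in its parameters, y = Θ(x) ξ + noise, with i.i.d. Gaussian noise of known standard deviation and an i.i.d. zero-mean Gaussian prior on ξ. In that case the MAP estimate and the posterior covariance have closed forms. The names `map_estimate`, `sigma_noise` and `sigma_prior`, and the synthetic system, are hypothetical.

```python
import numpy as np

def map_estimate(theta, y, sigma_noise, sigma_prior):
    """MAP estimate and posterior covariance for y = theta @ xi + noise.

    Assumes i.i.d. Gaussian noise (std sigma_noise) and an i.i.d.
    zero-mean Gaussian prior on xi (std sigma_prior).
    """
    n_params = theta.shape[1]
    # Posterior precision = likelihood precision + prior precision
    precision = theta.T @ theta / sigma_noise**2 + np.eye(n_params) / sigma_prior**2
    covariance = np.linalg.inv(precision)
    xi_map = covariance @ theta.T @ y / sigma_noise**2
    return xi_map, covariance

# Synthetic data: recover dx/dt = -2 x + 0.5 x^2 from noisy samples
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 200)
xi_true = np.array([0.0, -2.0, 0.5])
theta = np.column_stack([np.ones_like(x), x, x**2])  # library of candidate terms
y = theta @ xi_true + 0.05 * rng.standard_normal(x.size)

xi_map, cov = map_estimate(theta, y, sigma_noise=0.05, sigma_prior=10.0)
xi_std = np.sqrt(np.diag(cov))  # posterior standard deviation of each parameter
```

The diagonal of the posterior covariance gives the parameter variances used for uncertainty quantification. When the noise or prior variances are themselves unknown, this closed form no longer suffices, which is where methods such as JMAP and VBA come in.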

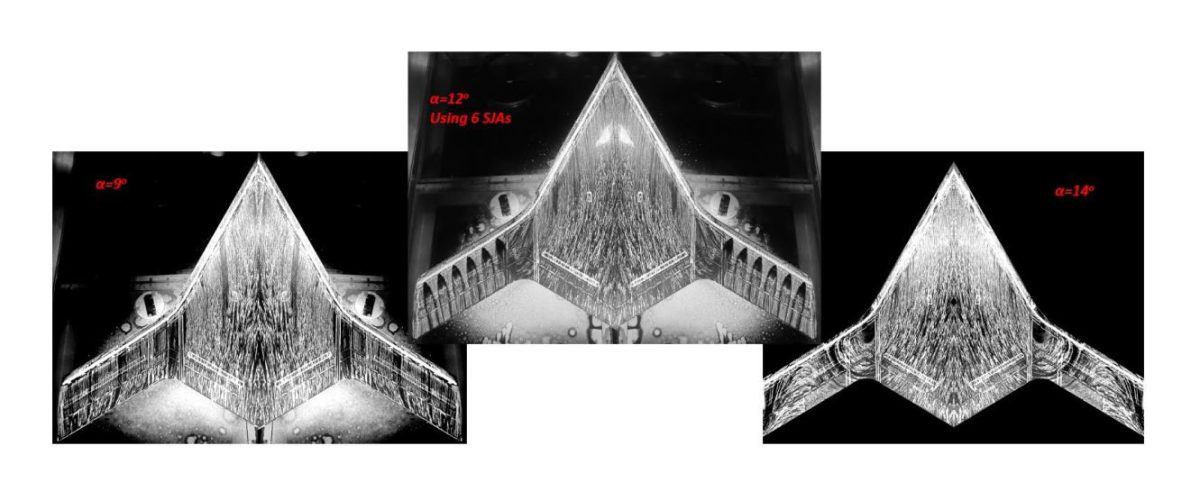

ON THE USE OF SUPERSONIC JET-CURTAINS FOR CONTROL OF MOMENTS ON TAILLESS AIRCRAFT

The purpose of this study is to explore the replacement of conventional moving control surfaces on a typical tailless aircraft model at high subsonic cruise speeds. Since the efficacy of sweeping jet actuators has only been demonstrated at low speeds, the current test considered their potential replacement by Supersonic Steady Jets (SSJs). It was shown that even a single jet, properly located and oriented, may outperform an array of actuators whose location and orientation do not take the changing local flow conditions into account. The test article chosen was the SWIFT model, which represents a typical blended wing-body configuration of a tailless aircraft. It was selected because it had been tested extensively using Sweeping Jet Actuators (SJAs). When a single supersonic jet designed for Mn=1.5 was used to control the pitch, it was able to increase the trimmed lift by approximately a factor of 3. The power it consumed was not necessarily smaller than that of an array of 6 SJAs, but it provided other advantages that are discussed in the paper. Large yawing moments could be provided by other SSJs that were not encumbered by large rolling moments. These tests proved that the momentum input is but one of many parameters controlling the flow. It was replaced by power coefficients, which are unambiguously measured and allow various modes of actuation to be compared.

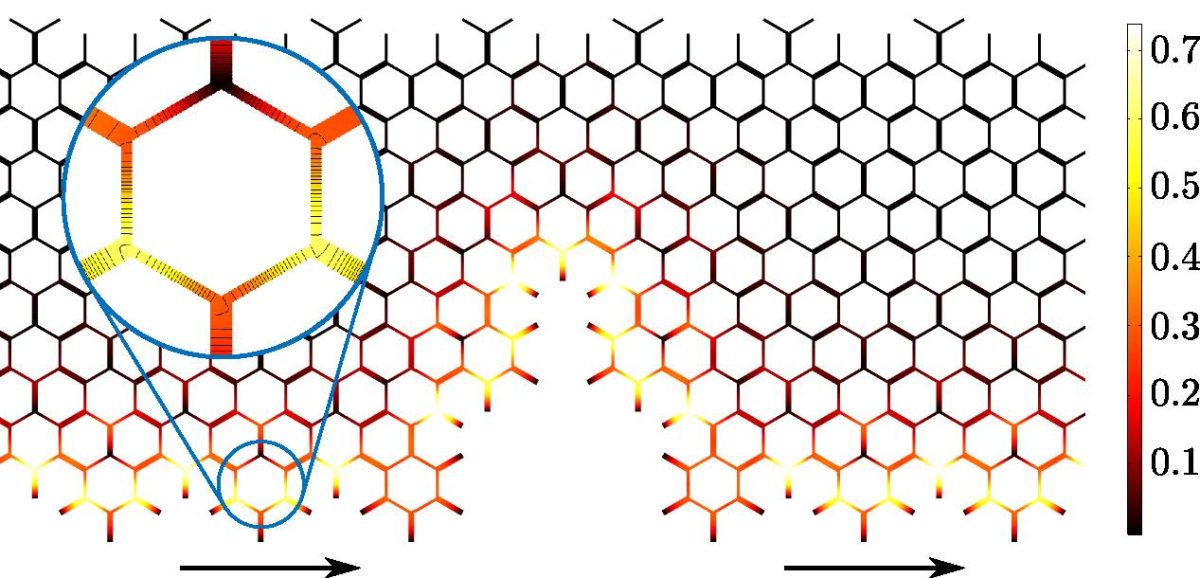

Scattering of topological edge waves in Kekule structures

Kekule structures are graphene-like lattices with a modulation of the intersite coupling that preserves the hexagonal symmetry of the system. These structures possess very peculiar properties. In particular, they display topological phases manifested by the presence of edge waves propagating along the edge of a sample. We will discuss the extraordinary scattering properties of these edge waves across defects or disorder. We will also discuss how to realize Kekule structures in acoustic networks of waveguides.

Computational fluid dynamics software: recent developments in topology optimisation, uncertainty quantification and machine learning

Computational fluid dynamics (CFD) software has gained popularity over the past few decades. It uses numerical analysis and data structures to analyze and solve problems that involve fluid flows. This remains a field under development, with ongoing research to improve the accuracy and speed of complex simulation scenarios.

New methods such as topology optimisation have recently been added to CFD software, paving the way to the design of highly efficient engineering components. The design of a component is updated by a mathematical algorithm to maximise a performance value subject to specific boundary conditions. This seminar will explain the basics of topology optimisation and walk through a specific application to valve optimisation and robust optimisation. CFD software provides a first prediction before experimental data are used to confirm the performance of an engineering component. Discrepancies are common, so uncertainty quantification can be used to improve the accuracy of both experimental data and CFD predictions. This will be shown on a cantilever beam test case, where the exceedance probability is estimated while the experimental data are subject to epistemic and aleatory uncertainties. The accuracy of model predictions in CFD software can also be improved by using data from higher-fidelity models. Machine learning algorithms have been gaining popularity over the past couple of years. They offer the opportunity to leverage data from high-fidelity simulations to develop models for low-fidelity simulations, which lets low-fidelity simulations gain accuracy while keeping a low computational cost. However, dealing with large data sets can be challenging in itself, as will be shown on an AI data-driven turbulence model test case, where data from DNS and LES are used to build a turbulence model for RANS simulations.
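To make the notion of exceedance probability concrete, here is a minimal Monte Carlo sketch for an end-loaded cantilever beam. It is not the seminar's test case: it treats only aleatory uncertainty, uses the Euler-Bernoulli tip deflection P L³ / (3 E I), and all distributions, dimensions and the threshold are hypothetical values chosen for illustration.

```python
import numpy as np

def tip_deflection(load, length, youngs_modulus, inertia):
    """Tip deflection of an end-loaded cantilever (Euler-Bernoulli): P L^3 / (3 E I)."""
    return load * length**3 / (3.0 * youngs_modulus * inertia)

def exceedance_probability(samples, threshold):
    """Fraction of samples strictly above the threshold."""
    return float(np.mean(np.asarray(samples) > threshold))

rng = np.random.default_rng(42)
n_samples = 100_000

# Hypothetical input distributions (illustrative values only)
load = rng.normal(1000.0, 100.0, n_samples)   # tip load, N
youngs = rng.normal(200e9, 10e9, n_samples)   # Young's modulus, Pa
length, inertia = 2.0, 8.0e-6                 # beam length (m), second moment of area (m^4)

deflection = tip_deflection(load, length, youngs, inertia)
p_exceed = exceedance_probability(deflection, threshold=2.0e-3)  # P(deflection > 2 mm)
```

Epistemic uncertainty (for example, an interval on the mean load rather than a single value) could be layered on top by repeating this estimate over the interval and reporting bounds on `p_exceed`, though that extension is only one possible design.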

QSQH theory of scale interaction in near-wall turbulence: the essence, evolution, and the current state

The Quasi-Steady Quasi-Homogeneous (QSQH) theory describes one of the mechanisms by which the large-scale motions active outside the viscous and buffer layers affect (‘modulate’) the turbulent flow inside these layers. The theory presumes that the near-wall turbulence adjusts itself to the large-scale component of the wall friction. Formulated in a mathematically rigorous form, the theory allows nontrivial quantitative predictions. The talk will describe the basics of the theory and its methods, its current state, and a new tool that makes applying the theory easy. Examples of applications and comparisons will be given.

Journée François Lacas for PhD students in combustion

On Thursday 9 March 2023, the PPRIME institute will host the Journée François Lacas on the premises of ISAE-ENSMA. For this day dedicated to PhD students in combustion, doctoral students from all over France are invited to present their latest progress and to exchange with specialists in the field from the various French laboratories, research organisations and groups. The day, co-organised by the PPRIME institute and the Groupement Français de Combustion (GFC), will also be the occasion to hold the general assembly of the GFC, the French section of the Combustion Institute.

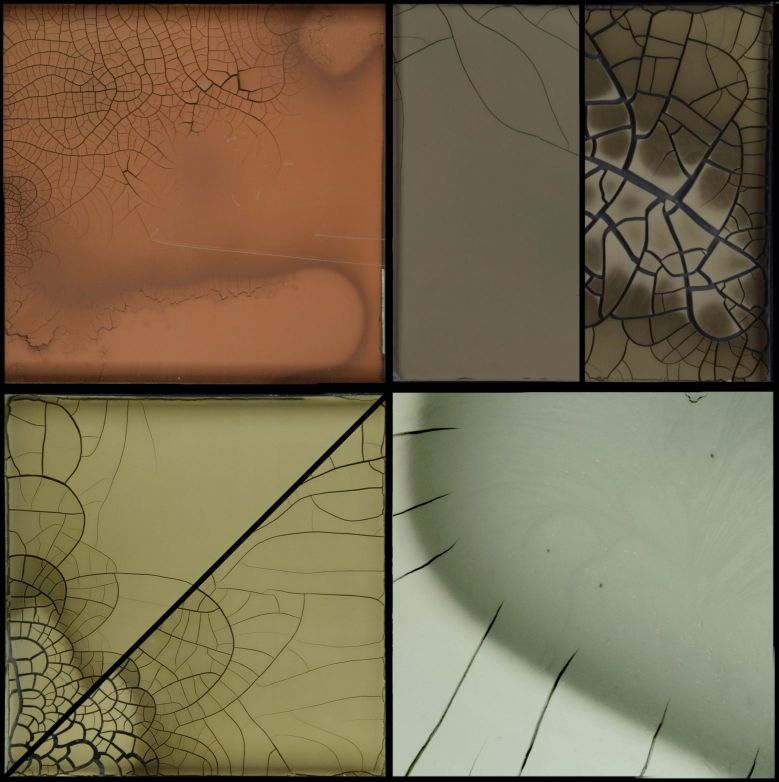

Morphogenesis of spatial networks: the example of cracks in clay

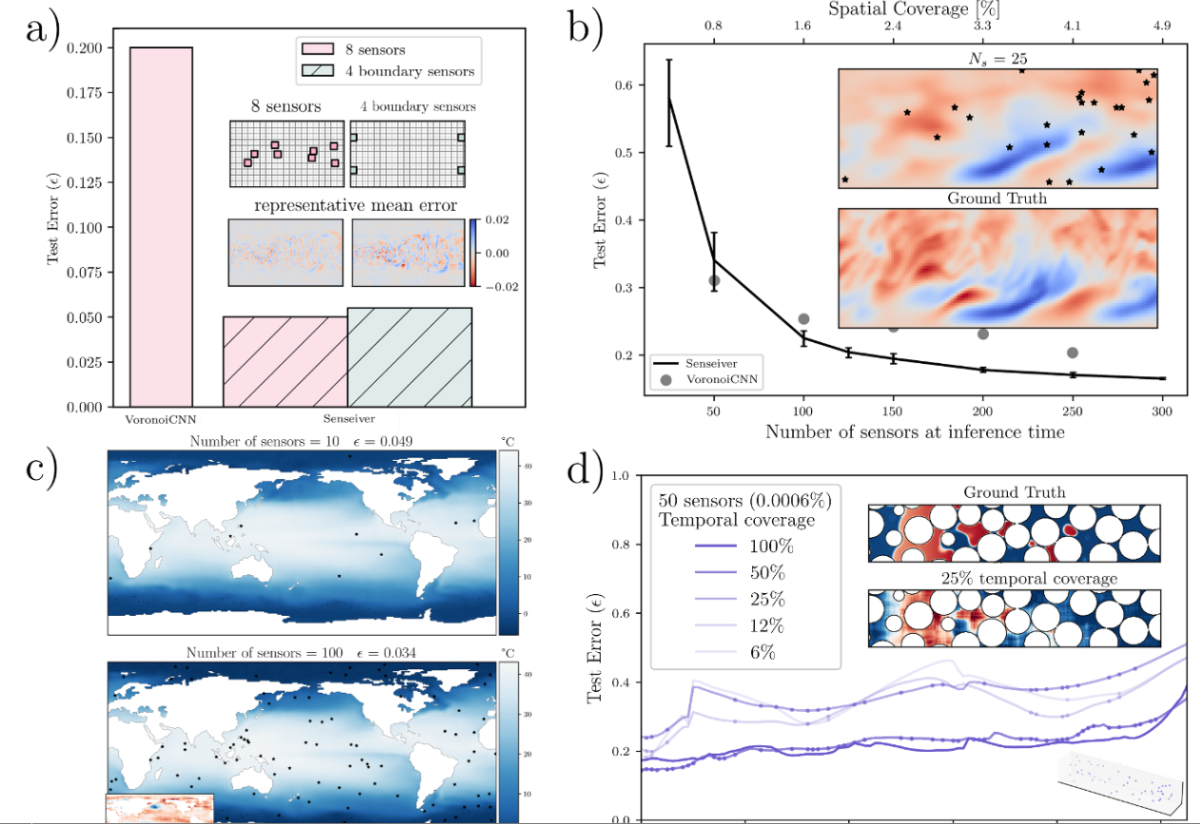

Sparse sensing and Interpretable machine learning for surrogate modeling of complex systems

Abstract: Several problems in earth sciences and engineering arise from complex systems governed by nonlinear PDEs. There are two major challenges in this area: a) the need for a computationally efficient, rapid modeling capability to assist in design and forecasting, i.e. surrogate modeling, and b) the growing need to exploit sparsely sampled sensor and measurement data to estimate the state of the full complex system, i.e. sparse sensing. Both challenges are characterized by the lack of a robust theoretical or analytical formulation, unlike the numerical simulation of partial differential equations. Data-driven techniques like machine learning have shown promise, but there is considerable progress to be made for applications. In this talk, I will present some recent advances our team at Los Alamos National Laboratory has made in these areas. I will discuss our sparse sensing learning approaches, which can scale to large datasets in diverse applications. Additionally, I will present a few promising directions in the area of interpretable machine learning for surrogate modeling of PDEs, with a focus on fluid dynamics. We demonstrate methods that reduce excessive dependence on deep neural networks and instead achieve superior accuracy through a tighter coupling with the governing equations. I will also outline the path ahead and opportunities for collaborative research.